One of the critical factors in SQL Server’s performance is how it manages memory. Since disk I/O is orders of magnitude slower than RAM, SQL Server employs a buffer management system to reduce physical reads and writes. The buffer manager ensures frequently accessed data remains in memory, dramatically improving response times for queries and transactions.

For beginners, buffer management may appear as a background detail, but in high-performance and enterprise environments, understanding and tuning the buffer cache can be the difference between an efficient database and a bottlenecked system. This article explores SQL Server buffer management, explaining how it works, why it matters, and how to optimize it for maximum throughput.

The Buffer Manager Explained

In SQL Server, the buffer manager is responsible for moving pages of data between disk storage and memory.

- Page Size – SQL Server stores data in 8KB pages. Each page can contain rows of a table, index entries, or system metadata.

- Buffer Pool – When a page is read from disk, it is copied into RAM and placed in the buffer pool. Subsequent reads fetch the page directly from memory instead of reloading it from disk.

- Cache Lifecycle – Pages remain in the buffer pool until memory pressure forces them out. Modified pages (dirty pages) must be written back to disk before they can be evicted.

This mechanism reduces costly disk I/O and provides the foundation for SQL Server’s performance.
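The lifecycle described above can be sketched as a toy buffer pool. This is an illustrative model, not SQL Server's implementation: the `ToyBufferPool` class and its names are hypothetical, a dict stands in for the data file, and eviction uses plain least-recently-used (LRU) order, which is simpler than the replacement policy SQL Server actually uses.

```python
from collections import OrderedDict

PAGE_SIZE = 8 * 1024  # SQL Server stores data in 8 KB pages


class ToyBufferPool:
    """Hypothetical toy model: a fixed number of page frames with LRU eviction.

    A plain dict stands in for the data file on disk. SQL Server's real
    replacement policy is more elaborate than the plain LRU used here.
    """

    def __init__(self, capacity_pages, disk):
        self.capacity = capacity_pages
        self.disk = disk              # page_id -> page bytes (the "data file")
        self.frames = OrderedDict()   # page_id -> [data, is_dirty], least recent first
        self.hits = 0
        self.physical_reads = 0
        self.physical_writes = 0

    def _evict_one(self):
        page_id, (data, is_dirty) = self.frames.popitem(last=False)
        if is_dirty:
            # A dirty page must be written back before its frame can be reused.
            self.disk[page_id] = data
            self.physical_writes += 1

    def get_page(self, page_id):
        if page_id in self.frames:
            # Cache hit: served from memory, no disk I/O.
            self.hits += 1
            self.frames.move_to_end(page_id)
            return self.frames[page_id][0]
        # Cache miss: free a frame if the pool is full, then read from disk.
        if len(self.frames) >= self.capacity:
            self._evict_one()
        self.physical_reads += 1
        data = self.disk[page_id]
        self.frames[page_id] = [data, False]
        return data

    def modify_page(self, page_id, data):
        # Changes are made in memory only; the page becomes dirty.
        self.get_page(page_id)
        self.frames[page_id] = [data, True]


if __name__ == "__main__":
    disk = {page_id: bytes(PAGE_SIZE) for page_id in range(10)}
    pool = ToyBufferPool(capacity_pages=3, disk=disk)
    for page_id in [0, 1, 2, 0, 1, 2]:
        pool.get_page(page_id)
    print(pool.physical_reads, pool.hits)  # 3 3
```

The second pass over pages 0 to 2 is served entirely from memory, which is the effect the buffer pool exists to provide.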

Types of Pages in the Buffer Pool

The buffer pool can contain different page types, each serving a distinct function:

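- Data Pages – Hold table rows (heap rows or the leaf level of a clustered index).

- Index Pages – Store B-tree structures for efficient lookups (nonclustered index rows and the upper levels of clustered indexes).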

- Text/Image Pages – Store large object (LOB) data such as text, images, or binaries.

- System Pages – Contain allocation and management metadata, such as GAM, SGAM, PFS, and IAM pages.
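To see how data pages translate into buffer pool memory, the sketch below estimates how many 8 KB data pages a heap needs and how much memory they would occupy if fully cached. It follows the rows-per-page formula Microsoft documents for estimating heap size (8,096 usable bytes per page after the 96-byte header, plus a 2-byte slot-array entry per row). The row size is an assumed input that should already include row overhead; fill factor, LOB pages, and index pages are ignored, and the function names are hypothetical.

```python
import math

PAGE_SIZE_BYTES = 8192
USABLE_BYTES_PER_PAGE = 8096   # 8 KB page minus the 96-byte page header
SLOT_ARRAY_ENTRY_BYTES = 2     # each row also needs a 2-byte slot-array entry


def rows_per_page(row_size_bytes):
    """Rows do not span pages, so round down to whole rows."""
    return USABLE_BYTES_PER_PAGE // (row_size_bytes + SLOT_ARRAY_ENTRY_BYTES)


def data_pages_needed(num_rows, row_size_bytes):
    """Round up: a partially filled page still occupies a full page."""
    return math.ceil(num_rows / rows_per_page(row_size_bytes))


def cached_size_mb(num_pages):
    """Memory used if every page is resident in the buffer pool."""
    return num_pages * PAGE_SIZE_BYTES / (1024 * 1024)


if __name__ == "__main__":
    # Hypothetical table: 1,000,000 rows of about 100 bytes each (including row overhead).
    pages = data_pages_needed(1_000_000, 100)
    print(rows_per_page(100), pages, round(cached_size_mb(pages), 1))  # 79 12659 98.9
```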

Checkpoints and Dirty Pages

When a transaction modifies data, changes are made in memory first. These modified pages are called dirty pages. To persist changes and ensure recoverability, SQL Server periodically writes dirty pages to disk during a checkpoint process.

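Checkpoints strike a balance between I/O cost and recovery time: frequent checkpoints mean more writes, while infrequent ones leave more log to replay. Durability itself is guaranteed by the transaction log, which is hardened to disk before a transaction commits. If SQL Server crashes, recovery mainly needs to redo changes logged after the last checkpoint (and roll back any transactions that were still open), rather than replaying the whole log.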
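The interplay of the log, dirty pages, and checkpoints can be illustrated with a deliberately simplified model. `ToyDatabase` is hypothetical: every logged change is treated as committed, there is no undo phase, and a log sequence number (LSN) is just a position in a list. It shows why recovery only needs to replay log records written after the last checkpoint.

```python
from dataclasses import dataclass, field


@dataclass
class ToyDatabase:
    """Hypothetical model: a write-ahead log plus in-memory pages and checkpoints."""
    disk: dict = field(default_factory=dict)     # durable page images
    memory: dict = field(default_factory=dict)   # buffer pool contents
    dirty: set = field(default_factory=set)      # pages changed since the last flush
    log: list = field(default_factory=list)      # (lsn, page_id, value) records
    checkpoint_lsn: int = 0

    def update(self, page_id, value):
        lsn = len(self.log) + 1
        self.log.append((lsn, page_id, value))   # log the change first
        self.memory[page_id] = value             # then change the page in memory
        self.dirty.add(page_id)

    def checkpoint(self):
        for page_id in self.dirty:
            self.disk[page_id] = self.memory[page_id]   # flush dirty pages
        self.dirty.clear()
        self.checkpoint_lsn = len(self.log)             # everything up to here is on disk

    def crash_and_recover(self):
        self.memory.clear()   # buffer pool contents are lost in a crash
        self.dirty.clear()
        replayed = 0
        for lsn, page_id, value in self.log:
            if lsn > self.checkpoint_lsn:   # redo only work logged after the checkpoint
                self.disk[page_id] = value
                replayed += 1
        return replayed


if __name__ == "__main__":
    db = ToyDatabase()
    db.update(1, "a")
    db.update(2, "b")
    db.checkpoint()
    db.update(1, "c")
    print(db.crash_and_recover(), db.disk)  # 1 {1: 'c', 2: 'b'}
```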

Asynchronous I/O

To prevent queries from waiting while dirty pages are written to disk, SQL Server uses asynchronous I/O. Background processes such as the checkpoint and the lazy writer issue these writes while other queries continue to run, which improves concurrency and responsiveness.
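The idea of handing writes to a background worker can be sketched with Python's standard `threading` and `queue` modules. This is an analogy, not SQL Server's I/O subsystem: `start_background_writer` is a hypothetical helper and a dict stands in for the data file. The caller enqueues dirty pages and carries on immediately; it waits only when it actually needs the writes to be finished.

```python
import queue
import threading


def start_background_writer(disk, write_queue):
    """Start a hypothetical background writer that drains (page_id, data) items."""

    def worker():
        while True:
            item = write_queue.get()
            if item is None:              # sentinel value: stop the writer
                write_queue.task_done()
                return
            page_id, data = item
            disk[page_id] = data          # stands in for the physical write
            write_queue.task_done()

    thread = threading.Thread(target=worker, daemon=True)
    thread.start()
    return thread


if __name__ == "__main__":
    disk = {}
    pending = queue.Queue()
    writer = start_background_writer(disk, pending)
    for page_id in range(5):
        pending.put((page_id, f"page {page_id}"))  # enqueue and continue without waiting
    # ... query work could continue here while the writer runs ...
    pending.join()        # block only when the writes must be complete
    pending.put(None)
    writer.join()
    print(sorted(disk))   # [0, 1, 2, 3, 4]
```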

Monitoring Buffer Usage

SQL Server provides several tools for monitoring buffer management:

- Dynamic Management Views (DMVs)

  - sys.dm_os_buffer_descriptors – Shows all database pages currently held in the buffer pool.

  - sys.dm_os_performance_counters – Provides performance metrics, including the buffer cache hit ratio.

- Buffer Cache Hit Ratio

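A critical metric representing the percentage of page requests satisfied from the buffer pool without reading from disk. A healthy system typically maintains a hit ratio above 95%. In sys.dm_os_performance_counters, the raw Buffer cache hit ratio value must be divided by its companion Buffer cache hit ratio base counter to obtain this percentage.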
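The sketch below keeps the monitoring T-SQL in Python strings and does the arithmetic on rows shaped like (object_name, counter_name, cntr_value), as a DB-API driver such as pyodbc would return them. Connecting to the server is left out, the function names are hypothetical, and the sample counter values used with it should be treated as illustrative. It also converts a page count from sys.dm_os_buffer_descriptors into megabytes.

```python
HIT_RATIO_QUERY = """
SELECT object_name, counter_name, cntr_value
FROM sys.dm_os_performance_counters
WHERE object_name LIKE '%Buffer Manager%'
  AND counter_name IN ('Buffer cache hit ratio', 'Buffer cache hit ratio base');
"""

CACHED_PAGES_QUERY = """
SELECT DB_NAME(database_id) AS database_name, COUNT(*) AS cached_pages
FROM sys.dm_os_buffer_descriptors
GROUP BY database_id;
"""


def buffer_cache_hit_ratio(rows):
    """Return the hit ratio as a percentage, or None if the base counter is zero."""
    values = {}
    for object_name, counter_name, cntr_value in rows:
        if "Buffer Manager" in object_name:
            # counter_name is a fixed-width nchar column, so strip the padding.
            values[counter_name.strip()] = cntr_value
    base = values.get("Buffer cache hit ratio base", 0)
    if base == 0:
        return None
    return 100.0 * values.get("Buffer cache hit ratio", 0) / base


def pages_to_mb(page_count):
    """Each buffered page is 8 KB."""
    return page_count * 8 / 1024
```

Dividing by the base counter matters: reading the raw Buffer cache hit ratio value on its own does not give a percentage.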

Optimizing Buffer Management

- Allocate Sufficient Memory

Give SQL Server’s buffer pool enough memory to hold the active working set of data. Configure the max server memory setting so that SQL Server does not consume all system RAM and starve the operating system.

- Optimize Queries

Efficient queries reduce unnecessary page reads. Poorly written queries can thrash the buffer cache by pulling in large numbers of pages that push out frequently used data.

- Use Proper Indexing

Well-designed indexes minimize full table scans, reducing pressure on the buffer pool.

- Partition Large Tables

When a query filters on the partitioning column, SQL Server can skip irrelevant partitions, so only the relevant pages are loaded into memory, conserving buffer space.

- Monitor and Tune Workloads

Use Extended Events and DMVs to identify queries causing heavy I/O and tune them accordingly.
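The buffer-space benefit of partitioning depends on partition elimination, which requires a filter on the partitioning column. The toy sketch below, with hypothetical names and an assumed fixed number of rows per page, counts how many pages a query would touch with and without such a filter.

```python
import math
from collections import Counter


def pages_per_partition(partition_keys, rows_per_page):
    """Count the pages each partition occupies, given each row's partition key."""
    row_counts = Counter(partition_keys)
    return {key: math.ceil(n / rows_per_page) for key, n in row_counts.items()}


def pages_touched(pages_by_partition, wanted_partitions=None):
    """Pages read by a scan; None means no filter on the partitioning column."""
    if wanted_partitions is None:
        return sum(pages_by_partition.values())   # every partition is scanned
    return sum(pages_by_partition.get(p, 0) for p in wanted_partitions)


if __name__ == "__main__":
    # Hypothetical table partitioned by order year: 1,000 rows per year, 100 rows per page.
    years = [year for year in (2021, 2022, 2023) for _ in range(1000)]
    layout = pages_per_partition(years, rows_per_page=100)
    print(pages_touched(layout), pages_touched(layout, {2023}))  # 30 10
```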

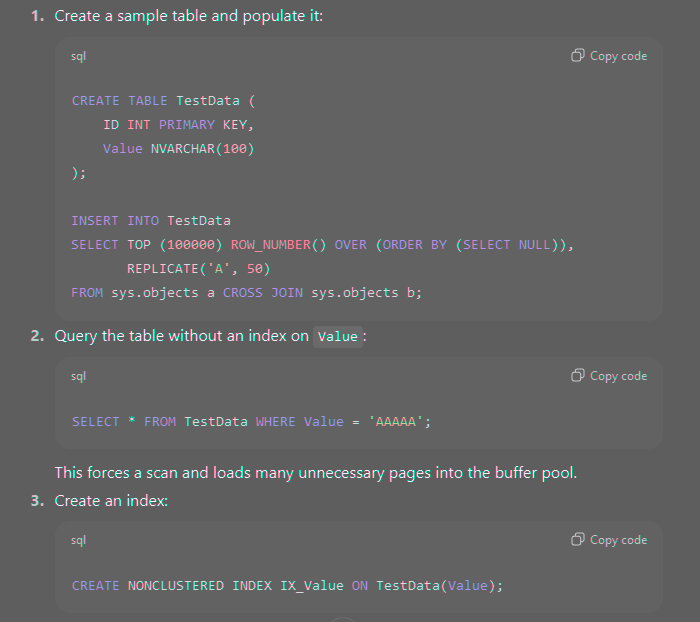

Example: Measuring Buffer Efficiency

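- Enable I/O statistics with SET STATISTICS IO ON and run a query that filters on a column with no index; note the logical and physical reads reported.

- Create an index on the filtered column.

- Run the query again and observe the execution plan.

SQL Server now performs an index seek instead of a scan, reading far fewer pages and reducing both I/O and buffer usage.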
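A rough way to see why the seek helps: a single-row seek reads about one page per B-tree level, while a scan reads every leaf page. The sketch below estimates both under a hypothetical fanout (index rows per non-leaf page), which in practice depends on key size; it ignores read-ahead, IAM pages, and range seeks that read several leaf pages.

```python
import math


def index_levels(leaf_pages, fanout):
    """Number of B-tree levels, including the leaf level."""
    levels, pages = 1, leaf_pages
    while pages > 1:
        pages = math.ceil(pages / fanout)   # pages needed at the next level up
        levels += 1
    return levels


def estimated_logical_reads(leaf_pages, fanout, use_seek):
    if use_seek:
        return index_levels(leaf_pages, fanout)   # root-to-leaf path for one row
    return leaf_pages                               # a full scan touches every leaf page


if __name__ == "__main__":
    # Hypothetical: 12,659 leaf pages and 500 index rows per non-leaf page.
    print(estimated_logical_reads(12_659, 500, use_seek=False))  # 12659
    print(estimated_logical_reads(12_659, 500, use_seek=True))   # 3
```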

Buffer Pool Extension

For environments with memory limitations, SQL Server (2014 and later) supports Buffer Pool Extension (BPE), which lets a file on nonvolatile storage such as an SSD act as an extension of the buffer pool. Only clean pages are stored in the extension. While not as fast as RAM, BPE can improve performance, particularly for read-heavy workloads, on systems constrained by physical memory.
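How an SSD tier changes where reads are served from can be shown with a toy two-tier cache. The `TwoTierCache` class is hypothetical and models reads only: pages evicted from the RAM tier are clean, so they are demoted to the extension, and a later read can be served from the SSD instead of the data file. Plain LRU in both tiers is an assumption of the sketch.

```python
from collections import OrderedDict


class TwoTierCache:
    """Hypothetical read-only model of a RAM buffer pool backed by an SSD extension."""

    def __init__(self, ram_pages, ssd_pages):
        self.ram = OrderedDict()   # least recently used first
        self.ssd = OrderedDict()
        self.ram_pages, self.ssd_pages = ram_pages, ssd_pages
        self.served = {"ram": 0, "ssd": 0, "disk": 0}

    def _demote_to_ssd(self, page_id):
        self.ssd[page_id] = True
        self.ssd.move_to_end(page_id)
        if len(self.ssd) > self.ssd_pages:
            self.ssd.popitem(last=False)   # clean page, so it can simply be dropped

    def read(self, page_id):
        if page_id in self.ram:
            self.served["ram"] += 1
            self.ram.move_to_end(page_id)
            return
        if page_id in self.ssd:
            self.served["ssd"] += 1        # cheaper than going back to the data file
            del self.ssd[page_id]
        else:
            self.served["disk"] += 1
        self.ram[page_id] = True
        if len(self.ram) > self.ram_pages:
            evicted, _ = self.ram.popitem(last=False)
            self._demote_to_ssd(evicted)


if __name__ == "__main__":
    with_bpe, without_bpe = TwoTierCache(2, 4), TwoTierCache(2, 0)
    for page_id in [1, 2, 3, 1, 2, 3]:
        with_bpe.read(page_id)
        without_bpe.read(page_id)
    print(with_bpe.served, without_bpe.served)
```

With three hot pages and room for only two in RAM, every re-read misses RAM; the extension turns those misses into SSD reads instead of data-file reads.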

Best Practices

- Keep Databases Clean – Archive unused data to reduce working set size.

- Set Memory Limits – Prevent SQL Server from starving the OS by configuring max server memory.

- Monitor Regularly – Use tools like Performance Monitor, Query Store, and DMVs to track buffer usage.

- Avoid Over-Indexing – Too many indexes can increase writes and consume buffer space unnecessarily.

- Use SSDs for TempDB – TempDB is heavily used for row versioning and sorting; placing it on fast storage reduces I/O pressure.

Hands-On Exercise

- Measure buffer cache hit ratio on your system.

- Run a large query without an index and observe the increase in I/O.

- Create an index and rerun the query.

- Compare the logical and physical reads, as well as the buffer cache hit ratio, before and after optimization.

This demonstrates the direct effect of indexing and query design on buffer efficiency.
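For the exercise, the logical and physical reads reported by SET STATISTICS IO ON (shown in the SSMS Messages tab) give a per-query before/after measure, whereas the buffer cache hit ratio is an instance-wide counter. The hypothetical helper below extracts those numbers with a regular expression. It assumes messages of the form "Table 'Name'. Scan count N, logical reads N, physical reads N, ..."; the trailing fields vary between SQL Server versions, and the sample text is illustrative, not a real measurement.

```python
import re

STATS_IO_PATTERN = re.compile(
    r"Table '(?P<table>[^']+)'\. Scan count \d+, "
    r"logical reads (?P<logical>\d+), physical reads (?P<physical>\d+)"
)


def parse_statistics_io(messages):
    """Return {table: (logical_reads, physical_reads)}, summed over all matches."""
    totals = {}
    for match in STATS_IO_PATTERN.finditer(messages):
        table = match.group("table")
        logical, physical = totals.get(table, (0, 0))
        totals[table] = (
            logical + int(match.group("logical")),
            physical + int(match.group("physical")),
        )
    return totals


def reduction_pct(before, after):
    """Percentage reduction from before to after (0.0 if before is zero)."""
    return 100.0 * (before - after) / before if before else 0.0


if __name__ == "__main__":
    # Illustrative message text, not real measurements.
    before = ("Table 'Orders'. Scan count 1, logical reads 1250, physical reads 40, "
              "read-ahead reads 1210, lob logical reads 0.")
    after = "Table 'Orders'. Scan count 1, logical reads 3, physical reads 0, read-ahead reads 0."
    b = parse_statistics_io(before)["Orders"][0]
    a = parse_statistics_io(after)["Orders"][0]
    print(b, a, round(reduction_pct(b, a), 2))  # 1250 3 99.76
```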

Buffer management is one of SQL Server’s most critical internal processes. By intelligently caching frequently accessed pages, SQL Server minimizes disk I/O and delivers high performance. Administrators who understand buffer pool mechanics can make informed decisions about memory allocation, indexing, and query optimization.

Whether you are managing a small business database in the cloud or a large enterprise system, effective buffer management is central to maintaining speed, efficiency, and reliability in SQL Server environments.