Modern organizations operate in complex data ecosystems. Business-critical information often resides in multiple systems, from transactional databases and spreadsheets to APIs and cloud platforms. To extract value, this data must be consolidated, cleansed, and transformed into a format suitable for analytics and decision-making.

Microsoft SQL Server Integration Services (SSIS) is a robust platform for building extract, transform, and load (ETL) workflows. It enables data professionals to automate movement, apply transformations, and integrate data from diverse sources. Whether you are a beginner building simple workflows or an enterprise architect orchestrating complex pipelines, SSIS provides the tools for scalable, repeatable, and efficient data integration.

This article explores the fundamentals of SSIS, its architecture, and practical steps for using it to automate workflows.

What is SSIS?

SQL Server Integration Services (SSIS) is a component of the SQL Server suite designed for:

- Data Extraction – Connecting to relational, flat file, and cloud data sources.

- Data Transformation – Cleansing, merging, aggregating, or reformatting data.

- Data Loading – Storing transformed data into SQL Server databases, data warehouses, or external targets.

- Workflow Automation – Scheduling tasks such as backups, file system operations, and data refreshes.

SSIS is built on a flexible design paradigm that allows users to visually orchestrate workflows in SQL Server Data Tools (SSDT) or build packages programmatically.

SSIS Architecture

SSIS is composed of several key components:

- Control Flow

  - Defines the workflow of tasks and containers.

  - Examples: Execute SQL Task, File System Task, Send Mail Task.

- Data Flow

  - Handles the actual movement and transformation of data.

  - Includes sources, transformations, and destinations.

- Connection Managers

  - Define connections to data sources such as SQL Server, Oracle, flat files, or Excel.

- Event Handlers

  - Trigger actions when specific events occur (e.g., logging on task failure).

- Package Deployment

  - Workflows are encapsulated in packages, which can be deployed to the SSIS catalog (SSISDB), the file system, or Azure Data Factory for execution.

Building a Simple SSIS Package

Step 1: Launch SQL Server Data Tools (SSDT)

Open SSDT and create a new Integration Services Project.

Step 2: Define Control Flow

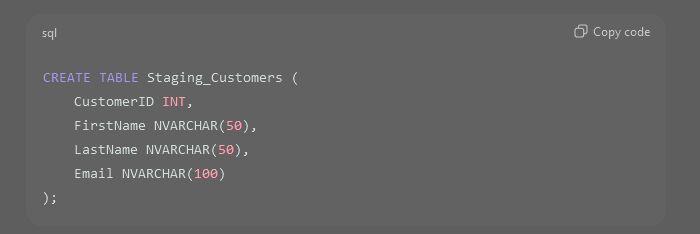

Drag an Execute SQL Task onto the Control Flow design surface and configure it to create a staging table.

Step 3: Configure Data Flow

- Add a Data Flow Task.

- Inside the Data Flow, configure:

  - Source: Flat File Source (CSV of customer records).

  - Transformation: Data Conversion (e.g., converting non-Unicode text to Unicode so that it matches an NVARCHAR column).

  - Destination: OLE DB Destination pointing to Staging_Customers.

Step 4: Execute the Package

Run the package in SSDT. Data from the CSV file will be extracted, transformed, and loaded into SQL Server.
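SSIS packages are built in the designer rather than written as scripts, but the same extract, convert, and load sequence can be sketched in a few lines of Python to make the data movement concrete. This is only an analogy, not SSIS code: the sample CSV content, the column names, and the use of an in-memory SQLite database in place of SQL Server are all assumptions made for illustration.

```python
import csv
import io
import sqlite3

# Hypothetical sample data standing in for the customer CSV file.
SAMPLE_CSV = "CustomerID,Name,City\n1,Ada Lovelace,London\n2,Alan Turing,Wilmslow\n"


def load_customers(csv_text: str, conn: sqlite3.Connection) -> int:
    """Extract rows from CSV text, convert types, and load them into Staging_Customers."""
    # Control Flow step: create the staging table (the Execute SQL Task's job).
    conn.execute(
        "CREATE TABLE IF NOT EXISTS Staging_Customers ("
        "CustomerID INTEGER PRIMARY KEY, Name TEXT NOT NULL, City TEXT)"
    )
    # Data Flow source: read the flat file.
    reader = csv.DictReader(io.StringIO(csv_text))
    # Data Flow transformation: convert text fields to the target types.
    rows = [(int(r["CustomerID"]), r["Name"].strip(), r["City"].strip()) for r in reader]
    # Data Flow destination: insert all rows in a single transaction.
    with conn:
        conn.executemany("INSERT INTO Staging_Customers VALUES (?, ?, ?)", rows)
    return len(rows)
```

Calling `load_customers(SAMPLE_CSV, sqlite3.connect(":memory:"))` loads the two sample rows and returns the row count.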

Automating Workflows

SSIS supports automation through:

- SQL Server Agent

  - Schedule packages to run at defined intervals.

  - Useful for nightly data refreshes or ETL jobs.

- Event-Driven Execution

  - Trigger workflows when files arrive in a directory.

  - Example: Automatically load a file once uploaded to an FTP server.

- Dependency Chains

  - Define execution order and conditions.

  - Example: Run data validation tasks only if data load completes successfully.
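The dependency-chain idea can be illustrated outside SSIS with a small Python sketch. In a package this behavior comes from precedence constraints set in the designer; the `run_chain` function and the task names below are hypothetical and only mimic an "on success" constraint between consecutive tasks.

```python
from typing import Callable, List, Tuple

# A task is a name plus a zero-argument callable that raises on failure.
Task = Tuple[str, Callable[[], None]]


def run_chain(tasks: List[Task]) -> List[str]:
    """Run tasks in order and stop at the first failure.

    Returns the names of the tasks that completed successfully.
    """
    completed: List[str] = []
    for name, action in tasks:
        try:
            action()
        except Exception as exc:
            # A failed task blocks everything downstream of it.
            print(f"{name} failed: {exc}; skipping downstream tasks")
            break
        completed.append(name)
    return completed
```

With a chain of `load`, `validate`, and `notify`, a failure in `validate` means `notify` never runs, which is the behavior described for running validation only after a successful load.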

Advanced Transformations in SSIS

- Lookup Transformation – Enrich incoming data by joining with reference tables.

- Merge Join – Join two sorted datasets on a key column (inner, left outer, or full outer join).

- Derived Column – Create calculated columns during ETL.

- Conditional Split – Route data rows based on conditions.

- Fuzzy Lookup/Grouping – Handle approximate matches and clean duplicate data.
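To show what two of these transformations do to individual rows, here is a minimal Python sketch of a Lookup followed by a Conditional Split. It is an analogy rather than SSIS code; the `CustomerID`, `Amount`, and `CustomerName` column names and the amount threshold are assumptions chosen for the example.

```python
from typing import Any, Dict, Iterable, List, Tuple

Row = Dict[str, Any]


def lookup_and_split(
    orders: Iterable[Row], customers: Dict[int, str], threshold: float
) -> Tuple[List[Row], List[Row], List[Row]]:
    """Return (large_orders, small_orders, unmatched) after a lookup and a split."""
    large: List[Row] = []
    small: List[Row] = []
    unmatched: List[Row] = []
    for order in orders:
        # Lookup: enrich the row from the reference data, keyed on CustomerID.
        name = customers.get(order["CustomerID"])
        if name is None:
            # Rows without a match go to a separate "no match" output.
            unmatched.append(order)
            continue
        enriched = {**order, "CustomerName": name}
        # Conditional Split: route the row by a condition on Amount.
        if enriched["Amount"] >= threshold:
            large.append(enriched)
        else:
            small.append(enriched)
    return large, small, unmatched
```

Each input row ends up in exactly one of the three outputs, which mirrors how a data flow fans out after a split.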

Best Practices

- Separate Staging and Production

  - Load data into staging tables first for cleansing and validation.

- Use Transactions Wisely

  - Wrap critical operations in transactions to ensure atomicity, but avoid long-running transactions that lock resources.

- Parameterize Packages

  - Use variables and parameters to make packages reusable across environments.

- Implement Logging and Error Handling

  - Configure event handlers to log failures and redirect problematic rows to error tables.

- Optimize Performance

  - Use batch commits for large loads.

  - Minimize transformations in the Data Flow where possible.

  - Pre-sort data when using Merge operations.
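Two of these practices, batch commits and redirecting problem rows, can be sketched together in Python. This is an illustration of the idea, not of how SSIS implements it: the `Staging_Sales` table, its columns, the default batch size, and the use of SQLite are all assumptions.

```python
import sqlite3
from typing import Iterable, List, Tuple


def load_in_batches(
    conn: sqlite3.Connection, rows: Iterable[Tuple[str, str]], batch_size: int = 1000
) -> Tuple[int, List[Tuple[str, str]]]:
    """Insert (store, amount) rows in batches; return (loaded_count, rejected_rows)."""
    conn.execute("CREATE TABLE IF NOT EXISTS Staging_Sales (Store TEXT, Amount REAL)")
    loaded = 0
    rejected: List[Tuple[str, str]] = []
    batch: List[Tuple[str, float]] = []

    def flush() -> None:
        nonlocal loaded
        if batch:
            # One transaction per batch keeps each commit short.
            with conn:
                conn.executemany("INSERT INTO Staging_Sales VALUES (?, ?)", batch)
            loaded += len(batch)
            batch.clear()

    for store, amount in rows:
        try:
            batch.append((store, float(amount)))
        except ValueError:
            # Redirect the bad row instead of failing the whole load.
            rejected.append((store, amount))
            continue
        if len(batch) >= batch_size:
            flush()
    flush()  # commit whatever is left in the final partial batch
    return loaded, rejected
```

The rejected rows would typically be written to an error table for later review; here they are simply returned to the caller.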

Example: Automating a Daily Sales Load

Business Case: A retailer receives daily sales files from stores. These need to be consolidated into a central SQL Server database.

SSIS Workflow:

- File System Task – Move raw files to an archive directory.

- Data Flow Task – Load each sales file into a staging table.

- Execute SQL Task – Apply business rules and insert validated rows into the Sales fact table.

- Send Mail Task – Notify stakeholders of job completion.

Scheduled via SQL Server Agent, this workflow ensures consistent, automated daily data ingestion.
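The Execute SQL Task step is where staged rows are filtered into the fact table. As a rough sketch of that step, the Python below runs an `INSERT ... SELECT` against SQLite. The table layouts and the two business rules (a store ID must be present and the amount must be positive) are hypothetical; the article does not specify them.

```python
import sqlite3


def create_sales_tables(conn: sqlite3.Connection) -> None:
    """Create the assumed staging and fact tables with identical columns."""
    for table in ("Staging_Sales", "FactSales"):
        conn.execute(
            f"CREATE TABLE IF NOT EXISTS {table} "
            "(StoreID INTEGER, SaleDate TEXT, Amount REAL)"
        )


def promote_validated_sales(conn: sqlite3.Connection) -> int:
    """Copy staged rows that pass the assumed rules into FactSales; return the row count."""
    with conn:  # commit on success, roll back on error
        cur = conn.execute(
            "INSERT INTO FactSales (StoreID, SaleDate, Amount) "
            "SELECT StoreID, SaleDate, Amount FROM Staging_Sales "
            "WHERE StoreID IS NOT NULL AND Amount > 0"
        )
    return cur.rowcount
```

Keeping the rules in a single set-based statement means rows that fail validation stay in staging, where they can be inspected without affecting the fact table.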

Integration with Cloud

SSIS packages can run on-premises or in the cloud. Using the Azure-SSIS Integration Runtime in Azure Data Factory (ADF), existing packages can be lifted and shifted to the cloud with little or no modification, providing scalability and hybrid integration capabilities.

Hands-On Exercise

- Create an SSIS project in SSDT.

- Configure a Flat File Source for a sample CSV.

- Use a Derived Column transformation to concatenate FirstName and LastName.

- Load the results into a SQL Server table.

- Schedule the package using SQL Server Agent.

This exercise introduces the entire lifecycle: design, transform, load, and automate.
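Before building the Derived Column step, it can help to pin down the logic it should apply. The Python function below is a minimal sketch of the concatenation. Trimming whitespace and tolerating missing values are assumptions about the desired behavior, not requirements stated in the exercise.

```python
from typing import Optional


def full_name(first: Optional[str], last: Optional[str]) -> str:
    """Join FirstName and LastName with a single space, skipping missing parts."""
    # Keep only the parts that are present and non-blank, with whitespace trimmed.
    parts = [p.strip() for p in (first, last) if p and p.strip()]
    return " ".join(parts)
```

For example, `full_name("Ada", "Lovelace")` gives `"Ada Lovelace"`, and a row with no last name yields just the first name rather than a trailing space.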

SSIS remains a cornerstone of Microsoft’s data integration strategy, offering a flexible and scalable way to move and transform data. From simple CSV imports to enterprise-grade ETL pipelines, SSIS equips organizations to automate workflows, improve data quality, and ensure timely delivery of insights.

For beginners, SSIS offers a graphical entry point into ETL concepts. For enterprises, it provides the robustness needed to handle complex, mission-critical data integration scenarios. By mastering SSIS, you establish a foundation for automation, scalability, and future readiness in your data ecosystem.